Tuesday, May 18, 2010

Static Analysis Worst Practices

We have been using static analysis for the last couple of years, and I think I have uncovered a few worst practices that I want to share in the hope that others can avoid the mistakes we have made...

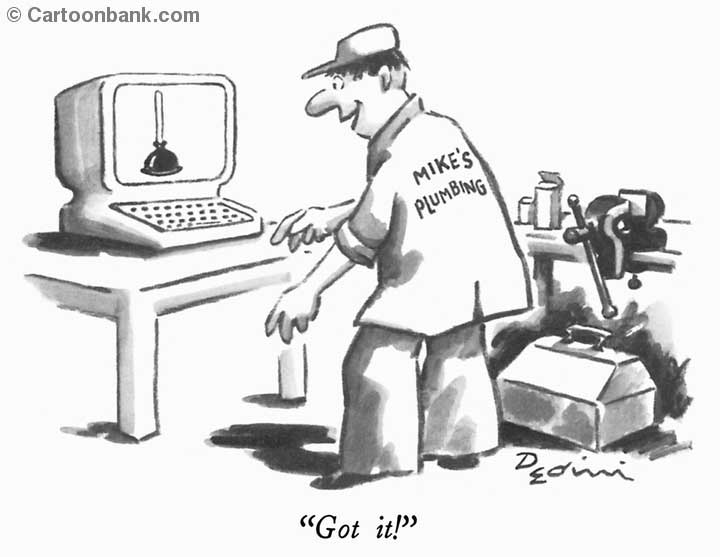

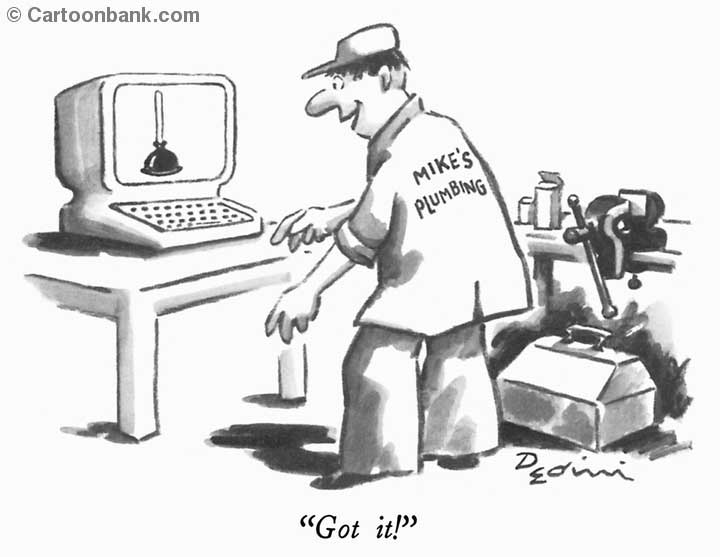

Many static analysis tools, whether from Fortify Software, Ounce Labs, Coverity or others, tend to be bought under the misguided belief that they automate the code review process. In reality, these tools are great at helping software security professionals find defects, but they aren't substitutes for those professionals.

One of the biggest challenges with all of these tools is the sheer number of defects they report. It takes human judgment to understand how bad a particular application's security posture really is, above and beyond the metrics the tool produces. For example, in our portfolio the application with the highest number of findings is actually in pretty good shape, while the application with the fewest findings is the one for which I would recommend a wholesale rewrite.

More importantly, the metrics on these tools' dashboards do not even roughly correlate with what IT executives need in order to make decisions. For example, a security professional will want to base a decision on risk rating criteria such as how difficult a flaw is to discover and how much skill is required to exploit it, and these tools simply provide no visibility in this regard.
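To make the gap between raw counts and risk concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `Finding` record, the 1 to 9 scales, the scoring formula, and the sample data are hypothetical, the ratings would come from a human reviewer, and none of this reflects what any vendor's dashboard actually computes.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Finding:
    """One reviewed finding. All ratings are hypothetical 1..9 reviewer scores."""
    app: str
    rule: str
    ease_of_discovery: int  # 1 = very hard to find, 9 = trivial to find
    ease_of_exploit: int    # 1 = expert skill needed, 9 = no skill needed
    impact: int             # 1 = negligible, 9 = severe


def risk_score(finding: Finding) -> float:
    """Illustrative score: average likelihood factor times impact."""
    likelihood = (finding.ease_of_discovery + finding.ease_of_exploit) / 2
    return likelihood * finding.impact


def findings_per_app(findings: List[Finding]) -> Dict[str, int]:
    """The count-based view: number of findings per application."""
    return dict(Counter(f.app for f in findings))


def peak_risk_per_app(findings: List[Finding]) -> Dict[str, float]:
    """The risk-based view: highest single risk score per application."""
    peaks: Dict[str, float] = {}
    for f in findings:
        peaks[f.app] = max(peaks.get(f.app, 0.0), risk_score(f))
    return peaks


# Made-up sample data: "billing" has more findings, "portal" has the dangerous one.
SAMPLE = [
    Finding("billing", "unused-variable", 2, 1, 1),
    Finding("billing", "verbose-error", 3, 2, 2),
    Finding("billing", "weak-hash-in-test", 2, 2, 2),
    Finding("portal", "sql-injection", 8, 8, 9),
]
```

With this sample, sorting by `findings_per_app` puts "billing" at the top of the list, while sorting by `peak_risk_per_app` puts "portal" there, which is the kind of inversion described above.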

Security professionals tend not to look only at a one-off scan; they also study the longer-term trends of an application. Think about what happens when Microsoft releases a patch for an exploit. A security professional may want to go "backwards" and figure out which prior releases the exploit can take advantage of. The notion of backward chaining and inferencing simply isn't built into most products.
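As a rough sketch of what going "backwards" could look like if scan results were kept per release, the Python below looks up which earlier releases contain a given finding. The `scan_history` structure, the fingerprint strings, and the function name are all hypothetical, and this is a simple lookup, not the backward chaining or inferencing a real product would need.

```python
from typing import Dict, List, Set


def affected_releases(scan_history: Dict[str, Set[str]], fingerprint: str) -> List[str]:
    """Return the releases whose stored scan results contain the fingerprint.

    scan_history maps a release label to the set of finding fingerprints
    recorded for that release (a hypothetical storage format).
    Releases are returned in the order they appear in scan_history.
    """
    return [
        release
        for release, fingerprints in scan_history.items()
        if fingerprint in fingerprints
    ]


# Made-up history: the injection flaw was fixed in 2.0, the XSS appeared in 1.1.
HISTORY = {
    "1.0": {"sqli:OrderDao.find"},
    "1.1": {"sqli:OrderDao.find", "xss:ProfilePage.render"},
    "2.0": {"xss:ProfilePage.render"},
}
```

Calling `affected_releases(HISTORY, "sqli:OrderDao.find")` on this sample yields the two releases that shipped with the flaw, which is the question a security professional asks once a patch or exploit becomes public.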

Are you familiar with the concept of a build forge, where the goal is to automate how software is built in order to guarantee a repeatable outcome? Many static analysis tools assume that a developer is driving them by hand rather than that they run as part of a larger process. As an agilist, I am a savage believer in frequent and automated software builds, and static analysis puts a manual step into the mix.
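One way to take the manual step back out is to have the build itself decide whether a scan result should fail it. The Python sketch below assumes the scan has already been exported as a JSON list of objects with `rule`, `severity`, and `file` keys; that format, the severity names, and the `gate` function are assumptions made for illustration, not the output of any particular tool.

```python
import json
import sys
from typing import Dict, List

# Hypothetical severity scale, ordered from least to most serious.
SEVERITY_ORDER = ["low", "medium", "high", "critical"]


def blocking_findings(findings: List[Dict[str, str]], threshold: str = "high") -> List[Dict[str, str]]:
    """Return the findings at or above the threshold severity."""
    limit = SEVERITY_ORDER.index(threshold)
    return [f for f in findings if SEVERITY_ORDER.index(f["severity"]) >= limit]


def gate(report_json: str, threshold: str = "high") -> int:
    """Return a process exit code: 1 if any finding blocks the build, else 0."""
    findings = json.loads(report_json)
    blockers = blocking_findings(findings, threshold)
    for f in blockers:
        # Report on stderr so the build log shows why the build failed.
        print(f"BLOCKING {f['severity']}: {f['rule']} in {f['file']}", file=sys.stderr)
    return 1 if blockers else 0
```

A build step could read the exported report and pass the return value of `gate` to `sys.exit`, so the scan becomes one more automated stage rather than something a developer has to remember to run.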

How many dashboards does an enterprise need? Should I have a separate dashboard for static analysis tools? Should the findings be merged with those acquired from web vulnerability scanners? Should this information be rolled up into GRC tools? Should it be incorporated into EA tools such as Troux and Alfabet, so that an enterprise can do strategic planning on its portfolio, where security is just one aspect of an application?

We need to have honest conversations about the strengths and limitations of static analysis. Otherwise it will become just another oversold enterprise tool, and the security posture of the enterprise will suffer...
